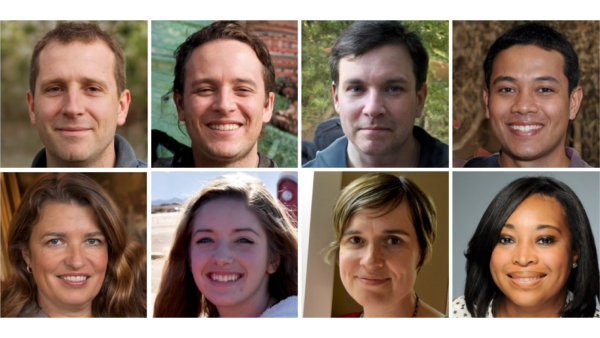

AI can produce extraordinarily lifelike faces (top) that are hard to tell apart from real photographs (bottom). (Image credit: Gray et al, Royal Society Open Science 12250921 (2025) CC-BY-4.0)


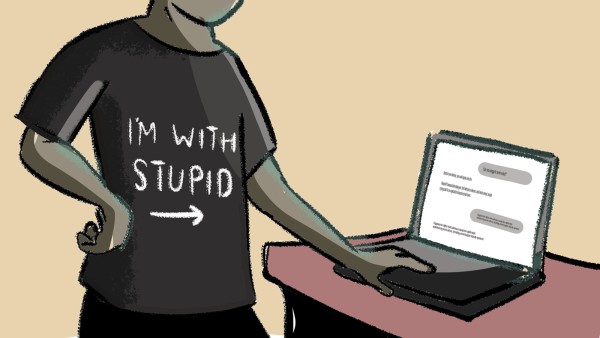

Synthetic faces created by artificial intelligence (AI) are now so realistic that even people with an exceptional ability to remember faces, known as "super recognizers," do no better than chance at spotting the fakes.

People with average face-recognition skills do even worse than chance: they are more likely to believe that AI-generated faces are real.


"I found it quite encouraging that our brief training method significantly increased performance in both groups," said Katie Gray, the study's lead author and an associate professor of psychology at the University of Reading in the U.K.

Surprisingly, Gray said, the training improved accuracy by a similar amount for super recognizers and typical recognizers. Because super recognizers are better at spotting fake faces to begin with, this suggests they rely on cues beyond simply noticing rendering errors.

Gray hopes that researchers can harness super recognizers' detection skills to identify AI-generated images more effectively.

“To optimally detect synthetic faces, a human-in-the-loop approach involving AI detection algorithms may be possible – with the human being a trained SR [super recognizer],” the study’s authors stated.

Detecting deepfakes

AI-generated images have flooded the internet in recent years. Deepfake faces are made using a two-part AI system known as a generative adversarial network (GAN). The first part, a generator, creates a fake image, and the second, a discriminator that learns from real-world images, judges whether that image is authentic. Through constant repetition, the generator's fakes become convincing enough to fool the discriminator.
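The adversarial loop described above can be illustrated with a deliberately tiny toy rather than a face model. In this sketch, which is a hypothetical example and not the study's method or any real deepfake system, the "real data" are numbers drawn from a normal distribution with mean 2, the generator can only shift random noise by a learned offset `b`, and the discriminator is a simple logistic-regression classifier. The function name `train_toy_gan`, the distributions and the learning rates are all illustrative assumptions; the generator uses the common "non-saturating" GAN loss.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=3000, batch=64, lr_d=0.05, lr_g=0.02, seed=0):
    """Hypothetical 1-D toy GAN (not a face model).

    'Real' data:   samples from N(2, 1).
    Generator:     G(z) = z + b with z ~ N(0, 1); only the shift b is learned.
    Discriminator: D(x) = sigmoid(w * x + c), i.e. logistic regression.
    """
    rng = random.Random(seed)
    real_mean = 2.0
    b = -2.0         # generator starts far from the real data
    w, c = 0.0, 0.0  # discriminator starts undecided (D(x) = 0.5)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: gradient ascent on
        # mean log D(real) + mean log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            r = 1.0 - sigmoid(w * x + c)
            gw += r * x
            gc += r
        for x in fake:
            p = sigmoid(w * x + c)
            gw -= p * x
            gc -= p
        w += lr_d * gw / batch
        c += lr_d * gc / batch

        # Generator step (non-saturating loss): gradient ascent on
        # mean log D(G(z)); d/db log sigmoid(w * x + c) = (1 - D(x)) * w.
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gb = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        b += lr_g * gb

    return b, w, c
```

Calling `train_toy_gan()` returns the generator's learned shift `b` together with the discriminator's parameters. In this setup `b` should end up close to the real mean of 2, at which point the discriminator can no longer tell the two sets of numbers apart and its weight `w` shrinks toward zero. Real face generators replace both parts with deep neural networks trained on large collections of photographs.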

These algorithms have become so advanced that people often fall for the trick and judge fake faces to be more "real" than genuine ones, an effect known as "hyperrealism."

As a result, researchers are developing training programs to improve people's ability to detect AI faces. These programs point out common flaws in AI-generated faces, such as a single tooth sitting in the middle of the mouth, an odd-looking hairline or unnatural-looking skin. They also highlight that fake faces tend to be more proportional than real ones.


In theory, super recognizers should be better than the average person at spotting fakes. These individuals excel at face perception and recognition, as shown by tasks such as judging whether two photographs of unfamiliar people show the same person. However, few studies have examined how well they detect fake faces, or whether training can improve their performance.

To fill this gap, Gray and her team ran a series of online experiments comparing the performance of super recognizers with that of average recognizers. The super recognizers were volunteers from the Greenwich Face and Voice Recognition Laboratory database who had scored in the top 2% on tasks testing their ability to remember faces they had never seen before.

In the first experiment, participants were shown either a real or an AI-generated face and had 10 seconds to decide whether it was real. Super recognizers did no better than random guessing, correctly identifying only 41% of AI faces. Average recognizers spotted just 30% of the fakes.

The groups also differed in how often they mistook real faces for fakes: super recognizers did so 39% of the time, and average recognizers 46% of the time.

The second experiment repeated the first but with a new group of participants, who first completed a five-minute training session showing examples of rendering errors in AI-generated faces. They then judged 10 faces and received immediate feedback on whether they had identified the fakes correctly. The training ended with a review of possible rendering errors, after which participants completed the same task as in the first experiment.

Training substantially boosted detection accuracy: super recognizers correctly identified 64% of fake faces, and average recognizers 51%. The rate at which each group mistook real faces for fakes stayed similar to the first experiment, at about 37% for super recognizers and 49% for average recognizers.
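The figures above give two numbers for each group: the share of AI faces correctly flagged as fake (the hit rate) and the share of real faces wrongly called fake (the false-alarm rate). Combining them shows why untrained average recognizers are described as doing worse than chance. The sketch below assumes, purely for illustration, that participants saw equal numbers of real and AI faces (the mix is not reported here), so overall accuracy is simply the mean of accuracy on AI faces and accuracy on real faces. The helper `balanced_accuracy` is hypothetical and is not the study's own analysis.

```python
def balanced_accuracy(hit_rate, false_alarm_rate):
    """Mean of accuracy on AI faces (hit rate) and accuracy on real faces
    (1 - false-alarm rate). ASSUMES equal numbers of real and AI faces."""
    return (hit_rate + (1.0 - false_alarm_rate)) / 2.0


# (share of AI faces flagged as fake, share of real faces called fake)
results = {
    ("super recognizers", "before training"): (0.41, 0.39),
    ("average recognizers", "before training"): (0.30, 0.46),
    ("super recognizers", "after training"): (0.64, 0.37),
    ("average recognizers", "after training"): (0.51, 0.49),
}

for (group, phase), (hits, false_alarms) in results.items():
    print(f"{group}, {phase}: {balanced_accuracy(hits, false_alarms):.1%}")
```

Under that assumption, overall accuracy works out to 51% for super recognizers and 42% for average recognizers before training, against a chance level of 50%, and to 63.5% and 51%, respectively, after training.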


After training, participants also spent longer scrutinizing the images: average recognizers slowed down by about 1.9 seconds, and super recognizers by 1.2 seconds. Gray said this is an important lesson for anyone trying to judge whether a face is real: take your time and carefully inspect the facial features.

However, the test was given immediately after the training, so it is unclear how long the effect lasts.

“The training can’t be seen as a long-lasting and efficient approach as it was not tested repeatedly,” wrote Meike Ramon, a professor of applied data science and an expert in face processing at the Bern University of Applied Sciences in Switzerland, in an early assessment of the study.

And because the two experiments used different groups of participants, it is unclear how much training improves any one person's detection ability, Ramon added. Answering that would require testing the same participants both before and after training.

Sophie Berdugo, Staff writer

Sophie is a U.K.-based staff writer at Live Science. She covers a wide range of topics, having reported on studies ranging from bonobo communication to the first water in the universe. Her work has also appeared in outlets including New Scientist, The Observer and BBC Wildlife, and she was nominated for the "Newcomer of the Year" award by the Association of British Science Writers in 2025 for her freelance work with New Scientist. Before becoming a science journalist, she completed a doctorate in evolutionary anthropology at the University of Oxford, where she spent four years studying differences in tool-use skill among chimpanzees.
