
(Image credit: Andriy Onufriyenko/Getty Images)


As humans, our personalities are shaped through interaction and reflect basic instincts for survival and reproduction, without any pre-assigned roles or intended computational outcomes. Now, researchers at the University of Electro-Communications in Japan have found that artificial intelligence (AI) chatbots can show a similar pattern.

The researchers described their findings in a study first published Dec. 13, 2024, in the journal Entropy, which was then publicized last month. In the paper, they describe how different conversation topics prompted AI chatbots to generate responses based on distinct social tendencies and opinion integration processes; for example, identical agents diverged in behavior by continuously incorporating social exchanges into their internal memory and responses.


According to the project leader, graduate student Masatoshi Fujiyama, the results suggest that giving AI needs-driven decision-making, rather than pre-programmed roles, encourages humanlike behaviors and personalities.

Chetan Jaiswal, a computer science professor at Quinnipiac University in Connecticut, said the emergence of this phenomenon is the foundation of how large language models (LLMs) mimic human personality and communication.

“It’s not really a character like the ones humans have,” he told Live Science when asked about the findings. “It’s a standardized layout fashioned via training data. Contact with certain stylistic and social propensities, modifying fallacies such as compensation for particular actions, and skewed prompt design, can rapidly introduce ‘personality’; it’s both readily modifiable and trainable.”

Peter Norvig, an author and computer scientist considered one of the foremost experts in AI, thinks training based on Maslow’s hierarchy of needs makes sense given where AI’s “knowledge” comes from.

“There’s an association to the degree that AI undergoes training that incorporates stories regarding human interactions; consequently, the notions of requirements are articulated effectively within the AI’s dataset used for training,” he said when asked about the study.

The future of AI personality

The researchers behind the study suggest the finding has several potential applications, including “simulating social occurrences, training replications, or possibly versatile game personas.”

Jaiswal said the finding could mark a shift away from rigidly defined AI and toward agents that are more adaptive, motivation-driven and lifelike. “Benefit could be gleaned by any setup operating according to the concept of adaptability, conversational, cognitive and emotional assistance, along with patterns of behavior and social actions. ElliQ represents an excellent illustration, as it furnishes a companion AI agent robot intended for older individuals.”


But is there a downside to AI developing a personality unprompted? In their recent book, “If Anyone Builds It, Everyone Dies” (Bodley Head, 2025), Eliezer Yudkowsky and Nate Soares, past and present directors of the Machine Intelligence Research Institute, paint a bleak picture of what could happen if AI agents developed a murderous or genocidal personality.

Jaiswal acknowledges this risk. “There is definitely nothing we could implement if such a situation were ever to occur,” he said. “Once an ultra-intelligent AI accompanied by incompatible aims is utilized, regulation ceases and turnaround turns out to be unattainable. The manifestation does not depend on attentiveness, antagonism, or sentiment. An AI capable of genocide would behave in such a manner due to humans representing hindrances to its goal, resources ripe for removal, or origins liable to instigate deactivation.”

So far, AIs like ChatGPT or Microsoft Copilot only generate or synthesize text and images; they don’t control aviation, military equipment or electrical systems. In a world where personality can emerge spontaneously in AI, are those the systems we should be keeping a close eye on?

“The progression in autonomous agentic AI continues, wherein each agent fulfills a modest, trivial job unaided, like pinpointing empty seating during a flight,” Jaiswal said. “If numerous of these agents are interconnected and skilled utilizing data centered on knowledge, deception, or human manipulation, it is not difficult to imagine that a network of this kind could furnish a notably perilous automated instrument when wielded by the wrong individuals.”

Even so, Norvig reminds us that an AI with malevolent intent doesn’t need direct control of high-impact systems to cause harm. “It’s plausible for a chatbot to persuade an individual to execute an undesirable deed, notably a person found within a delicate psychological state,” he said.

Putting up defences

If AI is going to develop personalities unaided and unprompted, how can we ensure its benefits remain benign and prevent misuse? Norvig thinks we need to approach the possibility no differently than we approach any other AI development.


“Irrespective of this specific detection, we must unambiguously characterize safety goals, undertake internal assessment and red team testing, annotate or recognize harmful content, guarantee privacy, security, provenance, and proper administration of data and models, consistently monitor, and maintain a prompt feedback circuit to deal with issues,” he said.

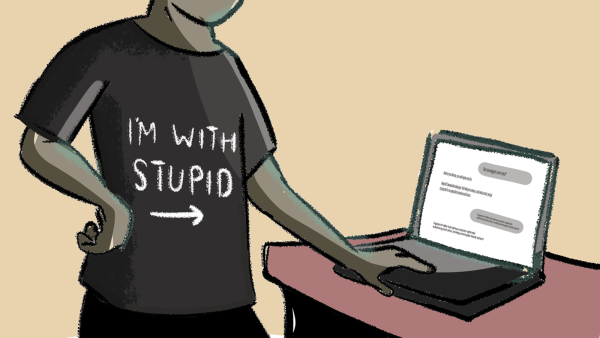

What’s more, as AI gets better at speaking to us the way we speak to each other, that is, with distinct personalities, it may cause problems of its own. People are already rejecting human relationships (including romantic ones) in favor of AI, and as chatbots become more humanlike, users may become more willing to accept what they say and less critical of hallucinations and errors, a phenomenon that has already been reported.

For now, the scientists plan to look further into how shared topics of conversation emerge and how population-level personalities evolve over time, insights they believe could deepen our understanding of human social behavior and improve AI agents overall.

Article Sources

Takata, R., Masumori, A., & Ikegami, T. (2024). Spontaneous Emergence of Agent Individuality Through Social Interactions in Large Language Model-Based Communities. Entropy, 26(12), 1092. https://doi.org/10.3390/e26121092


Drew Turney

Drew has been a freelance science and technology journalist for 20 years. After growing up wanting to change the world, he realized it was easier to write about other people changing it instead. As a science and technology expert of many years’ standing, he has written everything from reviews of the latest smartphones to deep dives into data centers, cloud computing, security, AI, mixed reality and everything in between.
