
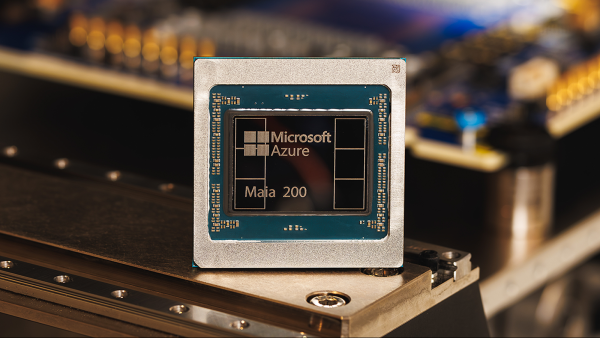

Microsoft’s Maia 200 chip is being integrated into its Azure cloud system. (Image credit: Microsoft)


Microsoft has unveiled Maia 200, its latest accelerator chip for artificial intelligence (AI), which company representatives say is three times more powerful than hardware from rivals such as Google and Amazon.

The new chip will be used for AI inference rather than training, powering systems and agents that make predictions, answer queries and generate outputs based on new data fed to them.


The new chip delivers more than 10 petaflops of performance (a petaflop is 10^15 floating point operations per second), Scott Guthrie, executive vice president of cloud and AI at Microsoft, said in a blog post. Petaflops are a measure of computing performance commonly used in supercomputing, where the most powerful supercomputers in the world can exceed 1,000 petaflops.
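To put those units in perspective, here is a minimal arithmetic sketch using only the figures quoted above. The variable names are illustrative. Note the assumption baked into the comparison: supercomputer rankings are typically measured at 64-bit precision, while the Maia 200 figure is for a much lower precision, so the ratio only conveys scale and is not a like-for-like benchmark.

```python
PETA = 10**15  # 1 petaflop = 10^15 floating point operations per second

# Figures as quoted in the article; treated here as round numbers.
maia_flops = 10 * PETA              # "more than 10 petaflops"
supercomputer_flops = 1_000 * PETA  # "can exceed 1,000 petaflops"

# Nominal ratio only: the two figures are not measured at the same precision.
chips_per_supercomputer = supercomputer_flops / maia_flops

print(f"Maia 200: {maia_flops:.0e} operations per second")
print(f"Nominal chip-equivalents of a 1,000-petaflop machine: {chips_per_supercomputer:.0f}")
```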

The chip reached this performance level in a data format known as “4-bit precision (FP4)”, a highly compressed format designed to speed up AI processing. Maia 200 also delivers 5 PFLOPS of performance in 8-bit precision (FP8). The difference between the two is that FP4 is more energy efficient but less accurate.
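The accuracy trade-off can be illustrated with a small sketch that rounds a handful of numbers onto a 4-bit and an 8-bit floating point grid and compares the rounding error. This is an illustration under stated assumptions, not a description of how Maia 200 works: the bit layouts used (2 exponent bits and 1 mantissa bit for FP4, 4 exponent bits and 3 mantissa bits for FP8) are common conventions that the article does not confirm for this chip, the grids are simplified by treating every bit pattern as a finite number, and the weights and helper names are hypothetical.

```python
def float_grid(exp_bits, man_bits, bias):
    """Return the non-negative values of a tiny floating point layout.

    Simplification: every bit pattern is treated as a finite number,
    so no codes are reserved for NaN or infinity.
    """
    values = set()
    for e in range(2 ** exp_bits):
        for m in range(2 ** man_bits):
            frac = m / 2 ** man_bits
            if e == 0:   # subnormal numbers: no implicit leading 1
                values.add(frac * 2.0 ** (1 - bias))
            else:        # normal numbers: implicit leading 1
                values.add((1 + frac) * 2.0 ** (e - bias))
    return sorted(values)


def quantize(weights, grid):
    """Scale weights onto the grid, round to the nearest value, scale back."""
    scale = max(abs(w) for w in weights) / grid[-1]
    out = []
    for w in weights:
        nearest = min(grid, key=lambda g: abs(abs(w) / scale - g))
        out.append(nearest * scale if w >= 0 else -nearest * scale)
    return out


def mean_abs_error(a, b):
    """Average absolute difference between two equal-length lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical weights, chosen only for illustration.
weights = [0.013, -0.42, 0.77, -1.3, 2.05, 0.31, -0.09, 1.68]

fp4_grid = float_grid(exp_bits=2, man_bits=1, bias=1)  # assumed FP4 layout
fp8_grid = float_grid(exp_bits=4, man_bits=3, bias=7)  # assumed FP8 layout

fp4_error = mean_abs_error(weights, quantize(weights, fp4_grid))
fp8_error = mean_abs_error(weights, quantize(weights, fp8_grid))

print("FP4 magnitudes:", fp4_grid)
print(f"mean abs error  FP4: {fp4_error:.4f}  FP8: {fp8_error:.4f}")
```

With only eight distinct magnitudes available, the 4-bit grid forces coarser rounding than the 128-value 8-bit grid, which is the loss of accuracy the paragraph above refers to.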

“In practical application, an individual Maia 200 node is readily able to operate today’s most extensive models, with sufficient capacity for even bigger models going forward,” Guthrie said in the blog post. “This indicates Maia 200 exhibits 3 times the FP4 effectiveness of the third generation Amazon Trainium, as well as FP8 effectiveness that surpasses Google’s seventh generation TPU.”

Chips ahoy

Maia 200 could be used for specialist AI workloads, such as running larger LLMs in the future. So far, Microsoft’s Maia chips have been used only in the Azure cloud infrastructure to run large-scale workloads for Microsoft’s own AI services, notably Copilot. However, Guthrie noted there would be “broader customer accessibility in the times ahead,” signaling that other organizations could access Maia 200 through the Azure cloud, or that the chips could one day be deployed in standalone data centers or server stacks.

Guthrie said that Maia 200 offers 30% better performance per dollar than existing systems thanks to the 3-nanometer process from the Taiwan Semiconductor Manufacturing Company (TSMC), the world’s leading chip manufacturer, which allows for 100 billion transistors per chip. In essence, this means Maia 200 could be more cost-effective and efficient for the most demanding AI workloads than existing chips.
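As a rough worked example of what a 30% gain in performance per dollar implies, the sketch below converts it into the relative cost of completing a fixed amount of work. The baseline is normalized to 1.0 purely for illustration; no actual prices are given in the article, and the variable names are hypothetical.

```python
improvement = 0.30  # "30% better performance per dollar", per the article

baseline_perf_per_dollar = 1.0  # normalized baseline (assumption, not a real price)
maia_perf_per_dollar = baseline_perf_per_dollar * (1 + improvement)

# Cost to complete a fixed amount of work is the inverse of performance per dollar.
relative_cost = baseline_perf_per_dollar / maia_perf_per_dollar
savings = 1 - relative_cost

print(f"Relative cost for the same work: {relative_cost:.3f}")
print(f"Implied saving: {savings:.1%}")
```

In other words, 30% more performance per dollar corresponds to roughly 23% lower cost for the same work, not 30%, because the saving is measured against the larger denominator.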

Maia 200 has a few other features beyond better performance and efficiency. It includes a memory system, for instance, that helps keep an AI model’s weights and data local, meaning less hardware is needed to run a model. It is also designed to be integrated quickly into existing data centers.

Maia 200 should enable AI models to run faster and more efficiently. This means Azure OpenAI users, such as scientists, developers and corporations, could see better throughput and speeds when developing AI applications and using tools like GPT-4 in their operations.


This next-generation AI hardware is unlikely to change everyday AI and chatbot use for most people in the short term, as Maia 200 is designed for data centers rather than consumer-grade hardware. However, end users could see the impact of Maia 200 in the form of faster response times and potentially more advanced features from Copilot and other AI tools built into Windows and Microsoft products.

Maia 200 could also provide a performance boost to developers and scientists who use AI inference via Microsoft’s platforms. This, in turn, could lead to improvements in AI deployment on large-scale research projects and in areas such as advanced weather modeling and the modeling of biological or chemical systems and compositions.

Roland Moore-Colyer

Roland Moore-Colyer is a freelance writer for Live Science and managing editor at consumer tech publication TechRadar, where he runs the Mobile Computing vertical. At TechRadar, one of the largest consumer technology websites in the U.K. and U.S., he focuses on smartphones and tablets. Beyond that, he draws on more than a decade of writing experience to bring people stories that cover electric vehicles (EVs), the evolution and practical use of artificial intelligence (AI), mixed reality products and use cases, and the evolution of computing, both on a macro level and from a consumer angle.
