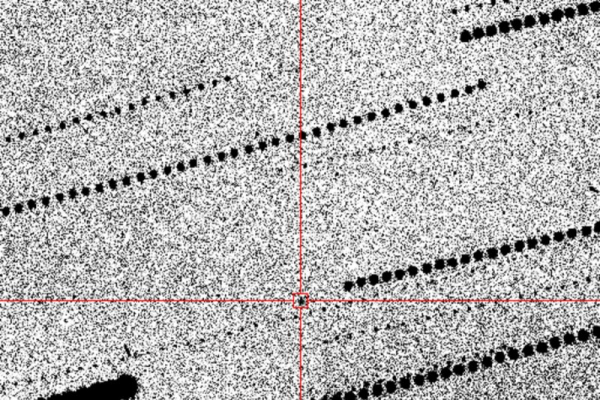

IBM has announced its plans to build Starling, the world's first fault-tolerant quantum computer, by 2029. (Image courtesy of IBM)

IBM says it has overcome a major hurdle in quantum computing and aims to launch the world's first scalable, fault-tolerant machine by 2029.

New research demonstrates innovative approaches to error correction that scientists believe could yield a system 20,000 times more powerful than any quantum computer that exists today.

In two new studies posted to the preprint server arXiv on June 2 and 3, the researchers presented error mitigation and correction techniques that they say address the error problems that have limited scaling, allowing hardware to scale up to nine times more efficiently than previously possible.

The new system, called Starling, will have 200 logical qubits, built from about 10,000 physical qubits. It will be followed in 2033 by a machine called Blue Jay, which will use 2,000 logical qubits.

One of the new studies, which has not yet been peer-reviewed, describes IBM's quantum low-density parity-check (LDPC) codes, a new approach to fault tolerance that researchers say will allow quantum computers to scale beyond previous limitations.

“The scientific problems have been solved” for advanced, fault-tolerant quantum computing, Jay Gambetta, IBM’s vice president of quantum operations, told Live Science. That means scaling quantum computers is now just an engineering challenge, not a scientific hurdle, Gambetta added.

Although quantum computers already exist, they can only beat classical computer systems (which use binary computations) when solving specific problems designed solely to demonstrate their potential.

One of the major obstacles to quantum supremacy or quantum advantage is the scaling of quantum processing units (QPUs).

As the number of qubits in processors increases, errors in the calculations performed by QPUs accumulate. This is because qubits are inherently “noisy,” and errors occur more frequently than in classical bits. Therefore, research in this area has largely focused on quantum error correction (QEC).

The Path to Resilience

Error correction is a fundamental task for all computing systems. In classical computers, binary bits can randomly flip from one to zero and vice versa. These errors can corrupt calculations, leaving them incomplete or causing them to fail outright.
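
To make the classical case concrete, here is a minimal Python sketch of the simplest error-correcting scheme, a three-bit repetition code decoded by majority vote. It is an illustration only and is not the method described in IBM's studies; the function names and the 10% flip probability in the usage note are assumptions chosen for the example.

```python
import random

def encode(bit):
    """Protect one bit by storing three copies of it."""
    return [bit, bit, bit]

def apply_noise(codeword, flip_prob, rng):
    """Flip each stored bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in codeword]

def decode(codeword):
    """Majority vote: correct as long as at most one copy was flipped."""
    return 1 if sum(codeword) >= 2 else 0

def logical_error_rate(flip_prob, trials, seed=0):
    """Estimate how often the decoded bit differs from the original."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        bit = rng.randint(0, 1)
        received = apply_noise(encode(bit), flip_prob, rng)
        if decode(received) != bit:
            failures += 1
    return failures / trials
```

Calling `logical_error_rate(0.1, 20000)` should return a value close to 3p² − 2p³ ≈ 0.028 for p = 0.1, well below the 10% error rate of an unprotected bit. The cost is redundancy: three stored bits for every bit of information.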

Qubits used for quantum computing are much more error-prone than their classical counterparts due to the added complexity of quantum mechanics. Unlike binary bits, qubits carry additional “phase information.”

While this allows them to perform calculations using quantum information, it also makes the task of error correction much more difficult.
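
The difference between a bit flip and a phase flip can be sketched in a few lines of Python. In this simplified, illustrative model (not taken from IBM's studies), a qubit is a pair of complex amplitudes, and the helper names `bit_flip`, `phase_flip`, `measure_probs` and `overlap` are assumptions made for the example.

```python
import math

def bit_flip(state):
    """Pauli-X error: swap the amplitudes of |0> and |1>."""
    a, b = state
    return (b, a)

def phase_flip(state):
    """Pauli-Z error: negate the amplitude of |1>."""
    a, b = state
    return (a, -b)

def measure_probs(state):
    """Probabilities of reading 0 or 1 in the computational basis."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)

def overlap(s, t):
    """Squared inner product: 1.0 for identical states, 0.0 for orthogonal ones."""
    inner = s[0].conjugate() * t[0] + s[1].conjugate() * t[1]
    return abs(inner) ** 2

# An equal superposition of |0> and |1>.
amp = complex(1 / math.sqrt(2))
plus = (amp, amp)

damaged = phase_flip(plus)
print(measure_probs(plus), measure_probs(damaged))  # same readout statistics
print(overlap(plus, damaged))                       # yet the states are orthogonal
```

A phase flip leaves the odds of reading 0 or 1 unchanged, so a direct readout cannot reveal it, yet it turns the state into a completely different one. A quantum code has to catch both kinds of error, and it has to do so without measuring the stored data directly, since that would destroy the superposition.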

Source: www.livescience.com