As researchers use AI to design new viruses, experts are examining whether current biosecurity measures can cope with this emerging risk. (Image credit: Andriy Onufriyenko via Getty Images)

Scientists have used artificial intelligence (AI) to build entirely new viruses, potentially opening the door to AI-designed forms of life.

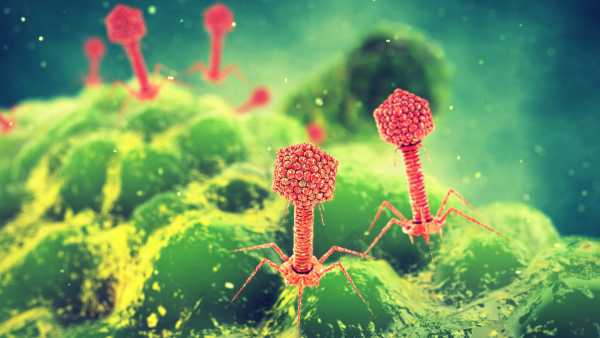

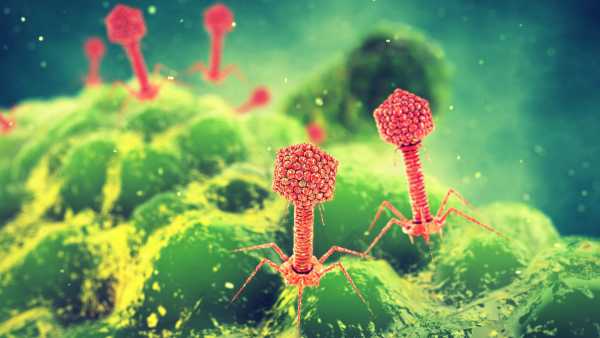

The viruses are distinct enough from existing strains that they could qualify as new species. They are bacteriophages, meaning they attack bacteria rather than humans. The study's authors took deliberate steps to ensure that their models could not design viruses capable of infecting humans, animals, or plants.

In a separate study published Thursday (Oct. 2) in the journal Science, researchers at Microsoft reported that AI can get around safeguards that would otherwise stop bad actors from, for instance, ordering harmful molecules from supply companies.

After discovering this weakness, the research team moved quickly to develop software patches that substantially reduce the risk. For now, this software requires specialized expertise and access to tools that are not readily available to the general public.

Taken together, the two studies highlight the risk that AI could design a new organism or bioweapon that threatens humans, potentially setting off a pandemic in the worst case. AI cannot do this yet, but experts say a future in which it can is not far off.

To keep AI from posing a threat, experts say, we need multilayered safety systems, better screening tools, and evolving regulations that govern AI-driven biological synthesis.

The dual-use problem

At the heart of the concern over AI-designed viruses, proteins, and other biological products is the “dual-use problem.” The term refers to any technology or research that could bring benefits but could also be used deliberately to cause harm.

A scientist studying infectious diseases might genetically modify a virus to learn what makes it more transmissible. But someone aiming to start the next pandemic could use the same research to engineer an ideal pathogen. Likewise, research on aerosol drug delivery can help people with asthma by leading to more effective inhalers, but the same designs could also be used to deliver chemical weapons.

Stanford doctoral candidate Sam King and his mentor, Brian Hie, an assistant professor of chemical engineering, were aware of this double-edged sword. They set out to build entirely new bacteriophages, or “phages” for short, that could hunt down and kill bacteria in infected patients. They described their work in a preprint posted to the bioRxiv database in September; it has not yet been peer reviewed.

Phages prey on bacteria, and phages that scientists have sampled from the environment and cultivated in the lab are already being tested as potential complements or alternatives to antibiotics. This could help address antibiotic resistance and save lives. But phages are viruses, and some viruses are dangerous to humans, which raised the theoretical possibility that the team could inadvertently create a virus capable of harming people.

The researchers anticipated this risk and took steps to reduce it. They made sure that their AI models were not trained on viruses known to infect humans or other eukaryotes, the domain of life that includes plants, animals, and everything else that is not a bacterium or an archaeon. They then tested the models to confirm that they could not independently come up with viruses similar to those that infect plants or animals.

With these safeguards in place, they asked the AI to model its designs on a phage that is already widely used in laboratory studies. Anyone looking to build a deadly virus would probably find it easier to use older, well-established methods, King said.

“The current condition of this approach is one of considerable difficulty, necessitating substantial knowledge and duration,” King told Live Science. “We hold that this does not presently reduce the obstacles associated with more perilous applications.”

Centering security

But in a fast-moving field, such precautions are being invented on the fly, and it is not yet clear which safety standards will ultimately prove sufficient. Researchers say regulations will need to balance the risks of AI-enabled biology against its benefits. They will also need to anticipate how AI models might get around the obstacles placed in front of them.

“These models exhibit intelligence,” said Tina Hernandez-Boussard, a professor of medicine at the Stanford University School of Medicine, who advised on safety for the AI models used in viral sequence benchmarks in the new preprint study. “One must remember that these models are constructed to attain peak performance; therefore, given training data, they can override safeguards.”

Thinking carefully about what to include in and exclude from an AI's training data is a foundational step that can head off many security problems down the road, she said. In the phage study, the researchers kept data on viruses that infect eukaryotes out of the model's training set. They also ran tests to check whether the models could independently work out genetic sequences that would make their bacteriophages dangerous to humans, and the models could not.
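The training-data precaution described above can be pictured as a simple filter applied before any model sees the data. The following is a minimal sketch under stated assumptions, not the Stanford team's actual pipeline: the `GenomeRecord` type, its `host_domain` field, and the `filter_training_records` function are hypothetical names invented for illustration, and the sketch assumes each record already carries a trustworthy host label.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Assumption for illustration: host domains whose viruses must never reach training.
EXCLUDED_HOST_DOMAINS = {"eukaryota"}


@dataclass(frozen=True)
class GenomeRecord:
    """Hypothetical training record; the field names are illustrative only."""
    accession: str
    host_domain: str  # e.g. "bacteria", "archaea", "eukaryota", or "" if unknown
    sequence: str


def filter_training_records(records: Iterable[GenomeRecord]) -> List[GenomeRecord]:
    """Keep only records whose host is known and not in an excluded domain.

    Records with a missing host label are dropped as well, a conservative
    choice made for this sketch rather than something stated in the study.
    """
    kept = []
    for record in records:
        domain = record.host_domain.strip().lower()
        if not domain or domain in EXCLUDED_HOST_DOMAINS:
            continue
        kept.append(record)
    return kept


if __name__ == "__main__":
    demo = [
        GenomeRecord("demo-1", "Bacteria", "ACGT"),
        GenomeRecord("demo-2", "Eukaryota", "ACGT"),
        GenomeRecord("demo-3", "", "ACGT"),
    ]
    print([record.accession for record in filter_training_records(demo)])
```

Dropping unlabeled records trades some training data for a lower chance that a mislabeled or unlabeled eukaryotic virus slips in. A filter like this would only be a first layer; as the article notes, the researchers also tested what the trained models could produce.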

Another layer of the safety net sits at the step where the AI's design, a string of genetic instructions, is turned into an actual protein, virus, or other functional biological product. Many leading biotech supply companies use software to make sure their customers are not ordering harmful molecules, although this screening is voluntary.

In their new study, however, Microsoft researchers Eric Horvitz, the company's chief science officer, and Bruce Wittman, a senior applied scientist, found that AI designs could fool existing screening software. These programs compare the genetic sequences in an order against genetic sequences known to produce toxic proteins. But AI can generate very different genetic sequences that are predicted to encode the same toxic function. As a result, these AI-generated sequences do not necessarily raise a red flag in the software.
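To make the comparison step concrete, here is a toy sketch of similarity-based order screening. It is an illustration under loud assumptions, not how any commercial screening tool or the Common Mechanism works: the function names, the k-mer length, the threshold, and the reference string are all invented, and the "sequences" are arbitrary letters with no biological meaning.

```python
from typing import Dict, List, Set, Tuple

# Arbitrary made-up string standing in for a listed sequence of concern.
TOY_REFERENCE = "ATGGCTAGCTAGGATCCGATCGATTACGCTAGCTAAGGCTTAA"
TOY_SEQUENCES_OF_CONCERN: Dict[str, str] = {"toy-entry-1": TOY_REFERENCE}


def kmers(sequence: str, k: int) -> Set[str]:
    """Return the set of all length-k substrings of the sequence."""
    sequence = sequence.upper()
    return {sequence[i:i + k] for i in range(len(sequence) - k + 1)}


def screen_order(
    order: str,
    sequences_of_concern: Dict[str, str],
    k: int = 8,
    threshold: float = 0.5,
) -> List[Tuple[str, float]]:
    """Flag listed entries that share many k-mers with the ordered sequence.

    Returns (entry name, fraction of the entry's k-mers found in the order)
    for every entry whose fraction meets the threshold.
    """
    order_kmers = kmers(order, k)
    hits = []
    for name, reference in sequences_of_concern.items():
        reference_kmers = kmers(reference, k)
        if not reference_kmers:
            continue  # reference shorter than k, nothing to compare
        shared = len(order_kmers & reference_kmers) / len(reference_kmers)
        if shared >= threshold:
            hits.append((name, shared))
    return hits


if __name__ == "__main__":
    # An exact copy of a listed entry is flagged.
    print(screen_order(TOY_REFERENCE, TOY_SEQUENCES_OF_CONCERN))
    # A string with no shared k-mers is not flagged.
    print(screen_order("ACACACAC" * 5, TOY_SEQUENCES_OF_CONCERN))
```

A screen of this kind only recognizes resemblance to entries already on its list, which is the limitation the Microsoft study describes: a design that looks different at the sequence level can pass even if its predicted function is the same.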

The researchers borrowed a process from cybersecurity to alert trusted experts and professional organizations to the problem, and launched a collaboration to patch the software. “Months later, software fixes were implemented globally to fortify biosecurity screening,” Horvitz said at a Sept. 30 press conference.

These patches reduced the risk, but across four commonly used screening tools, an average of 3% of potentially dangerous gene sequences still slipped through, Horvitz and his colleagues reported. The researchers also had to weigh security when publishing their results. Scientific papers are meant to be reproducible, meaning other researchers should have enough information to check the findings. But publishing all of the data on the sequences and software could show bad actors how to get around the security patches.

“There was a palpable tension among peer reviewers concerning the manner in which we should proceed,” Horvitz recalled.

The team ultimately settled on a tiered access system in which researchers who want to see the sensitive data apply to the International Biosecurity and Biosafety Initiative for Science (IBBIS), which acts as a neutral third party in evaluating the request. Microsoft has created an endowment to pay for this service and to host the data.

It is the first time a top science journal has endorsed this kind of data-sharing method, said Tessa Alexanian, the technical lead at Common Mechanism, a genetic sequence screening tool provided by IBBIS. “This managed access program constitutes an experiment, and we’re highly motivated to refine our methodology,” she said.

What else can be done?

There is currently little regulation of AI tools. Screenings like those examined in the new Science paper are voluntary. And there are devices that can build proteins right in the lab, with no third party required, so a bad actor could use AI to design dangerous molecules and then make them without any gatekeeper.

That said, professional consortiums and governments alike are increasingly issuing guidance on biosecurity. For example, a 2023 presidential executive order in the U.S. calls for a focus on safety, including “robust, reliable, repeatable, and standardized evaluations of AI systems,” along with policies and institutions to mitigate risk. According to Diggans, the Trump Administration is developing a framework that would limit federal research and development funding for companies that do not implement safety screening.

“We’ve witnessed increased interest among policymakers in enacting incentives for screening,” Alexanian noted.

In the United Kingdom, a state-backed organization called the AI Security Institute aims to foster policies and standards that mitigate the risks posed by AI. The organization funds research projects on safety and risk mitigation, including defending AI systems against misuse, defending against third-party attacks (such as injecting corrupted data into AI training systems), and finding ways to prevent publicly available, open-use models from being exploited for harmful purposes.

The good news is that as AI-designed genetic sequences become more complex, screening tools have more information to work with. That means whole-genome designs, such as King and Hie’s bacteriophages, would be fairly easy to screen for potential dangers.

“In general, synthesis screening performs more effectively with a greater amount of information at its disposal,” Diggans stated. “Thus, on the genome scale, it yields abundant data.”

Microsoft is working with government agencies on ways to use AI to detect AI misuse. For instance, Horvitz said, the company is looking for ways to sift through large volumes of sewage and air-quality data for signs that dangerous toxins, proteins, or viruses are being manufactured. “I believe we’ll observe screening expanding beyond the singular location of nucleic acid [DNA] synthesis to encompass the entire ecosystem,” Alexanian said.

And while AI could theoretically design a brand-new genome for a new species of bacteria, archaea, or a more complex organism, there is currently no easy way to turn those AI instructions into a living organism in the lab, King said. Threats from AI-designed life are not immediate, but they are not impossibly far off. Given the new horizons AI is likely to open up in the near future, the field as a whole needs to get creative, Hernandez-Boussard said.

“Funders, publishers, industry, academics — indeed, this multifaceted community — all have a responsibility to mandate these safety evaluations,” she concluded.

Stephanie Pappas, Live Science Contributor

Stephanie Pappas serves as a contributing writer for Live Science, where she examines an array of subjects ranging from geoscience to archaeology, as well as the human brain and behavior. She formerly held the position of senior writer for Live Science, but now operates as a Denver, Colorado-based freelancer, regularly providing content to Scientific American and The Monitor, the monthly publication of the American Psychological Association. Stephanie earned a bachelor’s degree in psychology from the University of South Carolina and a graduate certificate in science communication from the University of California, Santa Cruz.

Source: www.livescience.com