
AIs could benefit from some introspection. (Image credit: davincidig/iStock via Getty Images)


Have you ever had the experience of rereading a sentence several times only to realize you still don’t understand it? As taught to scores of incoming college freshmen, when you realize you’re spinning your wheels, it’s time to change your approach.

This process, becoming aware that something isn’t working and then changing what you’re doing, is the essence of metacognition, or thinking about thinking.


My colleagues Charles Courchaine, Hefei Qiu and Joshua Iacoboni and I are working to give AI systems this ability. We’ve designed a mathematical framework intended to enable generative AI systems, especially large language models such as ChatGPT or Claude, to monitor and regulate their own internal “cognitive” processes. Intuitively, you can think of it as giving generative AI an inner monologue, a way to assess its own confidence, detect confusion and decide when to think harder about a problem.

Why machines need self-awareness

Today’s generative AI systems are remarkably capable but fundamentally unaware. They generate responses without genuinely knowing how confident or confused their response might be, whether it contains conflicting information, or whether a problem deserves extra attention. This limitation becomes critical when generative AI’s inability to recognize its own uncertainty can have serious consequences, particularly in high-stakes applications such as medical diagnosis, financial advice and autonomous vehicle decision-making.

For example, consider a medical generative AI system analyzing symptoms. It might confidently suggest a diagnosis without any mechanism to recognize situations where it would be more appropriate to pause and reflect, like “These symptoms contradict each other” or “This is unusual, I should think more carefully.”

Developing such a capacity would require metacognition, which involves both the ability to monitor one’s own reasoning through self-awareness and the ability to control the response through self-regulation.

Inspired by neurobiology, our framework aims to give generative AI a semblance of these capabilities by using what we call a metacognitive state vector, which is essentially a quantified measure of the generative AI’s internal “cognitive” state across five dimensions.

5 dimensions of machine self-awareness

One way to think about these five dimensions is to imagine giving a generative AI system five different sensors for its own thinking.

- Emotional awareness, to help it track emotionally charged content, which might be important for preventing harmful outputs.

- Correctness evaluation, which measures how confident the large language model is about the validity of its response.

- Experience matching, where it checks whether the situation resembles something it has previously encountered.

- Conflict detection, so it can identify contradictory information that needs to be resolved.

- Problem importance, to help it assess stakes and urgency so it can prioritize resources.

We quantify each of these concepts within an overall mathematical framework to create the metacognitive state vector and use it to control ensembles of large language models. In essence, the metacognitive state vector converts a large language model’s qualitative self-assessments into quantitative signals that it can use to control its responses.
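To make the idea of a five-dimensional state vector concrete, here is a minimal Python sketch. The article does not give the authors’ actual mathematics, so everything here is an assumption for illustration: the class name `MetacognitiveState`, the field names, and the choice to score each dimension on a 0-to-1 scale are all hypothetical.

```python
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class MetacognitiveState:
    """Hypothetical container for the five dimensions named in the article.

    The [0, 1] scale and the field names are assumptions of this sketch,
    not the authors' definitions.
    """
    emotional_awareness: float  # how emotionally charged the content is
    correctness: float          # confidence that the response is valid
    experience_match: float     # similarity to previously seen situations
    conflict: float             # amount of contradictory information
    importance: float           # stakes and urgency of the problem

    def __post_init__(self):
        # Reject scores outside the assumed [0, 1] range.
        for name, value in self.__dict__.items():
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")

    def as_vector(self):
        # Flatten the five scores into a plain list, in field order.
        return list(astuple(self))


state = MetacognitiveState(0.1, 0.9, 0.8, 0.2, 0.5)
print(state.as_vector())
```

Keeping the scores in one immutable object means a controller can read all five signals at once without any of them changing mid-decision.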


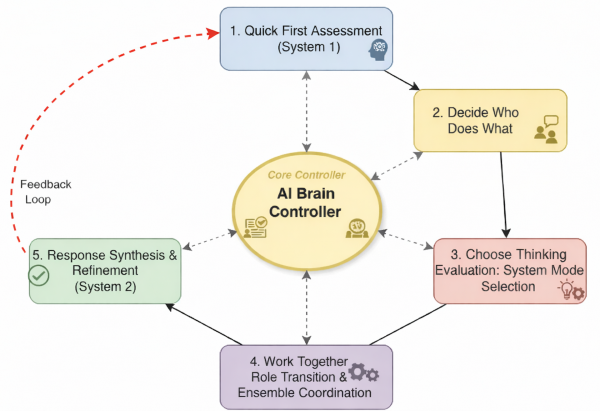

For example, when a large language model’s confidence in a response drops below a certain threshold, or the conflicts in the response exceed some acceptable level, it might switch from fast, intuitive processing to slow, deliberative reasoning. This is analogous to what psychologists call System 1 and System 2 thinking in humans.
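The switching rule described above can be sketched in a few lines of Python. This is an illustration only: the function name `choose_mode`, the 0-to-1 scoring, and the threshold values 0.7 and 0.3 are hypothetical placeholders, since the article does not state the thresholds the authors use.

```python
def choose_mode(confidence, conflict, min_confidence=0.7, max_conflict=0.3):
    """Pick a processing mode from two metacognitive signals.

    Both inputs are assumed to be scores in [0, 1]. The default
    thresholds are illustrative values, not figures from the article.
    """
    # Low confidence or too much contradiction triggers slow reasoning.
    if confidence < min_confidence or conflict > max_conflict:
        return "system2"
    # Otherwise stay on the fast, intuitive path.
    return "system1"


print(choose_mode(confidence=0.9, conflict=0.1))  # prints "system1"
print(choose_mode(confidence=0.5, conflict=0.1))  # prints "system2"
```

Either signal alone is enough to trigger the slower mode here, which matches the “or” in the description above.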

This conceptual diagram shows the basic idea for giving a set of large language models an awareness of the state of their processing.

Conducting an orchestra

Imagine a large language model ensemble as an orchestra where each musician (an individual large language model) comes in at certain times based on the cues received from the conductor. The metacognitive state vector acts as the conductor’s awareness, constantly monitoring whether the orchestra is in harmony, whether someone is out of tune, or whether a particularly difficult passage requires extra attention.

When performing a familiar, well-rehearsed piece, like a simple folk melody, the orchestra easily plays in quick, efficient unison with minimal coordination needed. This is the System 1 mode. Each musician knows their part, the harmonies are straightforward, and the ensemble operates almost automatically.

However, when the orchestra encounters a complex jazz composition with conflicting time signatures, dissonant harmonies or sections requiring improvisation, the musicians need greater coordination. The conductor directs the musicians to shift roles: Some become section leaders, others provide rhythmic anchoring, and soloists emerge for specific passages.

This is the kind of system we’re hoping to create in a computational context by implementing our framework, orchestrating ensembles of large language models. The metacognitive state vector informs a control system that acts as the conductor, telling it to switch modes to System 2. It can then tell each large language model to assume different roles, for example critic or expert, and coordinate their complex interactions based on the metacognitive assessment of the situation.
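As a rough sketch of the conductor idea, the Python function below hands out roles to an ensemble depending on the current mode. It is a toy under stated assumptions, not the authors’ control system: the function name `assign_roles`, the mode strings, the `"default"` role and the round-robin assignment are all hypothetical. Only the role names “expert” and “critic” come from the description above.

```python
def assign_roles(models, mode):
    """Return a {model_name: role} plan for an ensemble.

    `models` is a list of hypothetical model names. `mode` is either
    "system1" or "system2". The assignment policy is an assumption
    made purely for illustration.
    """
    if mode == "system1":
        # Fast path: every model plays its usual part, no coordination.
        return {name: "default" for name in models}
    if mode != "system2":
        raise ValueError(f"unknown mode: {mode}")
    # Slow path: alternate specialised roles across the ensemble.
    roles = ["expert", "critic"]
    return {name: roles[i % len(roles)] for i, name in enumerate(models)}


ensemble = ["model_a", "model_b", "model_c"]
print(assign_roles(ensemble, "system2"))
```

A real controller would presumably choose roles from the full state vector and not a fixed rotation. The rotation is used here only to keep the example small.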

Metacognition is like an orchestra conductor monitoring and directing an ensemble of musicians.

Impact and transparency

The implications extend far beyond making generative AI slightly smarter. In health care, a metacognitive generative AI system could recognize when symptoms don’t match typical patterns and escalate the problem to human experts instead of risking misdiagnosis. In education, it could adapt teaching strategies when it detects student confusion. In content moderation, it could identify nuanced situations requiring human judgment instead of applying rigid rules.

Perhaps most importantly, our framework makes generative AI decision-making more transparent. Instead of a black box that simply produces answers, we get systems that can explain their confidence levels, identify their uncertainties, and show why they chose particular reasoning strategies.

This interpretability and explainability is crucial for building trust in AI systems, especially in regulated industries or safety-critical applications.

The road ahead

Our framework does not give machines consciousness or true self-awareness in the human sense. Instead, our hope is to provide a computational architecture for allocating resources and improving responses that also serves as a first step toward more sophisticated approaches for full artificial metacognition.

The next phase in our work involves validating the framework with extensive testing, measuring how metacognitive monitoring improves performance across diverse tasks, and extending the framework to start reasoning about reasoning, or metareasoning. We’re particularly interested in scenarios where recognizing uncertainty is crucial, such as in medical diagnoses, legal reasoning and generating scientific hypotheses.

Our ultimate vision is generative AI systems that don’t just process information but understand their cognitive limitations and strengths. This means systems that know when to be confident and when to be cautious, when to think fast and when to slow down, and when they’re qualified to answer and when they should defer to others.

This edited article is republished from The Conversation under a Creative Commons license. Read the original article.

Ricky J. Sethi, Professor of Computer Science, Fitchburg State University; Adjunct Teaching Professor, Worcester Polytechnic Institute

Ricky J. Sethi is currently a Professor of Computer Science at Fitchburg State University. Ricky is also Director of Research for the Madsci Network, an Adjunct Professor at Worcester Polytechnic Institute (WPI), and an SME Team Lead for SNHU Online at Southern New Hampshire University.
