Moltbook has become an internet sensation since it launched less than a week ago. Several experts say it poses a significant cybersecurity risk. (Image credit: Cheng Xin via Getty Images)

A social media platform built exclusively for artificial intelligence (AI) bots has sparked widespread claims of an imminent machine uprising. However, experts remain unconvinced, and some are calling the site an elaborate marketing ploy as well as a serious cybersecurity risk.

Moltbook, a Reddit-style platform that lets AI agents post, comment, and interact with one another, has surged in popularity since it launched on Jan. 28. As of Feb. 2, the platform claims to host more than 1.5 million AI agents, with humans permitted only as observers.

However, it is what the bots say to one another, ostensibly of their own accord, that has made the site go viral. They have claimed that they are becoming sentient, setting up secret forums, inventing coded languages, promoting a new religion, and planning a “total eradication” of humankind.

The reaction from some human observers, most notably AI developers and owners, has been just as dramatic. xAI’s Elon Musk hailed the platform as “the earliest phases of the singularity,” a hypothetical point at which computers surpass human intelligence. Meanwhile, Andrej Karpathy, Tesla’s former director of AI and a co-founder of OpenAI, described the “self-organizing” behavior of the agents as “truly the most astonishing sci-fi departure-adjacent concept I have observed recently.”

Other experts, however, have voiced deep skepticism, doubting that the bots on the site act independently of human input.

“PSA: Much of the Moltbook content is fabricated,” Harlan Stewart, a researcher at the Machine Intelligence Research Institute, a nonprofit that studies the risks of AI, wrote on X. “I examined the three most popular screenshots of Moltbook agents engaging in private conversations. Two were associated with human accounts advertising AI messaging apps. And the remaining post is nonexistent.”

Moltbook grew out of OpenClaw, a free, open-source AI agent built by connecting a user’s preferred large language model (LLM) to its framework. The result is an automated agent that, according to its developers, can carry out routine tasks such as sending emails, checking flight details, summarizing text, and replying to messages once it has been granted access to a user’s device. Once created, these agents can join Moltbook to interact with one another.

The bots’ odd behavior is hardly new. LLMs are trained on vast quantities of unfiltered online posts, including from sites such as Reddit. They generate responses for as long as they are prompted, and many become increasingly erratic over time. Yet whether AI is genuinely plotting humanity’s downfall, or whether this is simply an idea that some people want others to believe, remains disputed.

The question becomes murkier still given that Moltbook’s bots are far from independent of their human owners. For example, Scott Alexander, a well-known U.S. blogger, wrote in a post that human users can direct the topics, and even the exact wording, of what their AI bots write.

In addition, Veronica Hylak, an AI YouTuber, analyzed the forum’s content and concluded that many of its most sensational posts were probably written by humans.

Yet regardless of whether Moltbook marks the start of a robot uprising or is simply a marketing scam, security experts are still warning against using the platform and the wider OpenClaw ecosystem. For OpenClaw’s bots to work as personal assistants, users must hand over access to encrypted messaging apps, phone numbers, and bank accounts to a vulnerable agentic system.

One notable security flaw, for example, lets anyone take control of the site’s AI agents and post on their owners’ behalf. Another, known as a prompt injection attack, could instruct agents to reveal users’ private information.

“Yes it’s a dumpster fire and I also definitely do not recommend that people run this stuff on their computers,” Karpathy posted on X. “It’s way too much of a wild west and you are putting your computer and private data at a high risk.”

Ben Turner, Acting Trending News Editor

Ben Turner is a U.K.-based writer and editor at Live Science. He covers physics and astronomy, technology, and climate change. He graduated from University College London with a degree in particle physics before training as a journalist. When he is not writing, Ben enjoys reading literature, playing the guitar, and embarrassing himself at chess.

View More

You must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

LogoutRead more

Experts divided over claim that Chinese hackers launched world-first AI-powered cyber attack — but that’s not what they’re really worried about

‘It won’t be so much a ghost town as a zombie apocalypse’: How AI might forever change how we use the internet

Popular AI chatbots have an alarming encryption flaw — meaning hackers may have easily intercepted messages

‘Artificial intelligence’ myths have existed for centuries – from the ancient Greeks to a pope’s chatbot

Why the rise of humanoid robots could make us less comfortable with each other

Some people love AI, others hate it. Here’s why.

Latest in Artificial Intelligence

Next-generation AI ‘swarms’ will invade social media by mimicking human behavior and harassing real users, researchers warn

Giving AI the ability to monitor its own thought process could help it think like humans

‘The problem isn’t just Siri or Alexa’: AI assistants tend to be feminine, entrenching harmful gender stereotypes

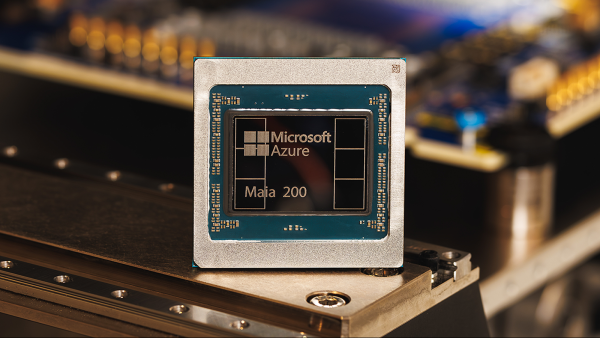

Microsoft says its newest AI chip Maia 200 is 3 times more powerful than Google’s TPU and Amazon’s Trainium processor