(Image credit: Sharamand/Getty Images)

Researchers in the United States and Japan have used a new type of component in artificial intelligence (AI) processors that consumes less power while performing complex calculations. The new design lets more operations run at the same time, helping the processor reach the best solution more efficiently.

Most computers rely on bits (the 0s and 1s that represent digital data and that software uses to carry out instructions), but some specialized technologies, such as neuromorphic processors, use probabilistic bits (p-bits) instead. Unlike a conventional bit, which holds a fixed value until it is changed, a p-bit fluctuates randomly between 0 and 1.

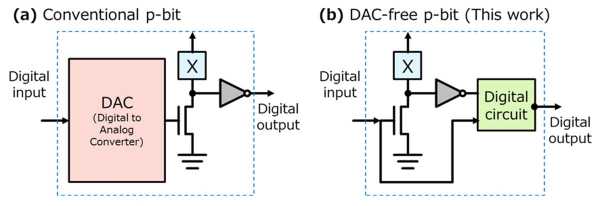

Although the randomness of p-bits is useful, programmers still need to control how often each p-bit outputs a 0 or a 1 in order to steer the system toward better answers. For this reason, most p-bits are built with digital-to-analog converters (DACs), which apply analog voltages to bias them toward one value or the other. However, these components are bulky and consume a lot of power.
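
A widely used textbook model of a p-bit (not necessarily the exact circuit in this study) outputs 1 when tanh(I) exceeds a uniform random number drawn from [-1, 1], where the input I stands in for the analog bias voltage a DAC would supply. The minimal Python sketch below assumes that model; the function names are hypothetical. It shows how the bias alone sets the fraction of 1s.

```python
import math
import random


def p_bit(bias: float, rng: random.Random) -> int:
    """Return 1 or 0 from a p-bit driven by an analog-style input `bias`.

    Textbook model: output 1 when tanh(bias) exceeds a uniform random
    number drawn from [-1, 1], so P(1) = (1 + tanh(bias)) / 2.
    """
    r = rng.uniform(-1.0, 1.0)
    return 1 if math.tanh(bias) > r else 0


def fraction_of_ones(bias: float, samples: int = 100_000, seed: int = 0) -> float:
    """Estimate how often the p-bit outputs 1 for a fixed bias."""
    rng = random.Random(seed)
    return sum(p_bit(bias, rng) for _ in range(samples)) / samples


if __name__ == "__main__":
    for bias in (-2.0, 0.0, 2.0):
        expected = (1 + math.tanh(bias)) / 2
        print(f"bias={bias:+.1f}  measured={fraction_of_ones(bias):.3f}  expected={expected:.3f}")
```

Sweeping the bias from negative to positive moves the output from mostly 0s to mostly 1s; in a conventional design, that continuous bias is what the DAC's analog voltage provides.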

“The reliance on analog signals was holding back progress,” said Shunsuke Fukami, a materials science professor and co-author of the study, in a statement. “So we found a digital way to control the behavior of p-bits without the large, bulky analog circuits that are typically used.”

Instead of DACs, the researchers built their p-bits from magnetic tunnel junctions (MTJs), tiny devices that naturally flip between 0 and 1 at random, and fed this stream of bits into a local digital circuit. Depending on how long the circuit waits while combining these random 0s and 1s, and on how it counts and weights each value, the resulting p-bit output can be made mostly 0s or mostly 1s.
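
The article does not spell out the circuit's exact counting and weighting rule, so the following is only a hypothetical illustration of the general idea, and it models the counting part alone. Each MTJ is treated as an unbiased coin, the circuit collects a window of `window` raw bits (the "waiting time"), and it emits 1 when the number of 1s reaches a digital `threshold`. Both parameter names are assumptions, and the MTJ is modeled with Python's pseudo-random generator rather than real device physics.

```python
import random


def mtj_bit(rng: random.Random) -> int:
    """Model an MTJ as an unbiased random source: 0 or 1 with equal odds."""
    return rng.getrandbits(1)


def digital_p_bit(window: int, threshold: int, rng: random.Random) -> int:
    """Hypothetical DAC-free p-bit.

    Count the 1s in `window` raw MTJ bits and output 1 only if the count
    reaches `threshold`. Raising the threshold biases the output toward 0;
    lowering it biases the output toward 1. No analog voltage is involved.
    """
    ones = sum(mtj_bit(rng) for _ in range(window))
    return 1 if ones >= threshold else 0


def measure(window: int, threshold: int, samples: int = 20_000, seed: int = 1) -> float:
    """Fraction of 1s produced for a given digital setting."""
    rng = random.Random(seed)
    return sum(digital_p_bit(window, threshold, rng) for _ in range(samples)) / samples


if __name__ == "__main__":
    for threshold in (2, 4, 6):
        print(f"window=8 threshold={threshold}: P(1) ~ {measure(8, threshold):.3f}")
```

Only integer settings change here, which is the point of the comparison: the bias is chosen by digital logic rather than by an analog voltage level.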

The researchers presented their findings in a paper on Dec. 10, 2025, at the 71st International Electron Devices Meeting in San Francisco. The work was carried out in collaboration with Taiwan Semiconductor Manufacturing Company (TSMC), the world's leading semiconductor foundry.

The circuit's settings can be adjusted by a user or a program, which controls how strongly the p-bit favors one value. Importantly, because this control is entirely digital, it takes up much less chip area and uses much less power than conventional DACs.
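
Because the settings in a scheme like this are integers, the achievable biases form a discrete menu that can be computed exactly. Continuing the same hypothetical window-and-threshold model (an assumption, not the published circuit), the probability of a 1 is a binomial tail, and a program could pick the setting whose bias is closest to a requested target. The helper names are illustrative only.

```python
from math import comb


def p_one(window: int, threshold: int) -> float:
    """Exact P(output = 1) for the hypothetical window/threshold p-bit.

    With unbiased raw bits, the count of 1s is Binomial(window, 1/2),
    so P(count >= threshold) = sum over k >= threshold of C(window, k) / 2**window.
    """
    return sum(comb(window, k) for k in range(threshold, window + 1)) / 2 ** window


def best_threshold(window: int, target: float) -> int:
    """Choose the digital setting whose bias lies closest to `target`."""
    return min(range(window + 2), key=lambda t: abs(p_one(window, t) - target))


if __name__ == "__main__":
    window = 8
    for t in range(window + 2):
        print(f"threshold={t}: P(1) = {p_one(window, t):.4f}")
    print("setting for ~70% ones:", best_threshold(window, 0.70))
```

A longer window gives a finer menu of bias levels at the cost of waiting for more raw bits per output, which is one way to read the article's remark that the bias depends on how long the circuit waits.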

Circuit diagrams of a conventional DAC-based p-bit (a) and the proposed DAC-free p-bit (b).

Self-organizing behaviour adds to efficiency

Another advantage of the new approach is that the p-bits can exhibit "self-organizing" behavior, according to the researchers. With DACs, when a user sets a preference for mostly 1s or mostly 0s, an analog signal biases all the p-bits continuously. They all feel this influence at the same moment, which means they can end up updating their outputs simultaneously.

Ideally, p-bits would update in a staggered fashion, giving each one the chance to read the outputs of the p-bits that updated before it and use that information to decide whether switching to 0 or 1 would better serve the overall calculation.
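
The benefit of staggered updates shows up in a standard toy example (an illustration, not taken from the study): two p-bits with an antiferromagnetic coupling, meaning the best answer has them disagree. The sketch uses the ±1 convention common in probabilistic computing (-1 plays the role of 0); the coupling `J`, the inverse temperature `BETA` and the helper names are arbitrary, hypothetical choices. When both p-bits update at the same instant from each other's old values, they tend to flip together and keep missing the answer; when they update one after another, the second sees the first one's new value.

```python
import math
import random

J = -1.0    # antiferromagnetic coupling: energy is lowest when the two p-bits disagree
BETA = 2.0  # inverse "temperature": higher values make p-bits follow their input more strictly


def sample(input_signal: float, rng: random.Random) -> int:
    """p-bit update in the +/-1 convention: m = sign(tanh(beta * I) - r)."""
    return 1 if math.tanh(BETA * input_signal) > rng.uniform(-1.0, 1.0) else -1


def run(sweeps: int, sequential: bool, seed: int = 0) -> float:
    """Return the fraction of sweeps that end in a best answer (m1 == -m2)."""
    rng = random.Random(seed)
    m = [1, 1]  # start in a wrong (aligned) state
    solved = 0
    for _ in range(sweeps):
        if sequential:
            # Staggered: p-bit 2 reads p-bit 1's freshly updated value.
            m[0] = sample(J * m[1], rng)
            m[1] = sample(J * m[0], rng)
        else:
            # Simultaneous: both p-bits read the old state at the same instant.
            old = m[:]
            m[0] = sample(J * old[1], rng)
            m[1] = sample(J * old[0], rng)
        solved += m[0] == -m[1]
    return solved / sweeps


if __name__ == "__main__":
    print("simultaneous:", run(10_000, sequential=False))
    print("staggered:   ", run(10_000, sequential=True))
```

In this toy, the simultaneous run spends roughly half its sweeps in a wrong state, because the two p-bits keep copying each other's stale values, while the staggered run lands on a correct answer almost every sweep.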

With the new setup, when the user adjusts the parameters for the desired bias, a digital signal is sent to each p-bit's local control circuit. Because each circuit produces its next output on its own timing, the p-bits naturally avoid updating at exactly the same moment. The staggered outputs also let multiple p-bits work in parallel and explore different possible solutions at once, allowing the processors to perform calculations more efficiently.
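
One way to picture "each circuit using its own timing" is an event-driven simulation in which every p-bit waits an independent random delay before its next update. This is an assumption-laden toy, not the team's design: the exponential delays, the four-p-bit ring problem and the constants are all hypothetical. Because independent random clocks essentially never tick at the same instant, updates happen one at a time, and each p-bit reads the latest values of its neighbors.

```python
import heapq
import math
import random

# Hypothetical problem: four p-bits in a ring with antiferromagnetic couplings.
# The best solutions alternate +1, -1, +1, -1 around the ring.
N = 4
J = -1.0    # coupling strength (negative = neighbors prefer to disagree)
BETA = 2.0  # inverse "temperature" controlling how strictly p-bits follow their input
NEIGHBORS = {i: ((i - 1) % N, (i + 1) % N) for i in range(N)}


def energy(m: list[int]) -> float:
    """Ising energy E = -sum over ring edges of J * m_i * m_j (lower is better)."""
    return -sum(J * m[i] * m[(i + 1) % N] for i in range(N))


def simulate(events: int, seed: int = 0) -> float:
    """Event-driven run in which every p-bit updates on its own random clock.

    Returns the fraction of updates after which the ring sits in a best solution.
    """
    rng = random.Random(seed)
    m = [1] * N  # all p-bits aligned: the worst configuration for this problem
    ground = -N * abs(J)  # lowest possible energy for an even-length ring
    # Each p-bit schedules its first update after an independent random delay.
    queue = [(rng.expovariate(1.0), i) for i in range(N)]
    heapq.heapify(queue)
    in_ground = 0
    for _ in range(events):
        t, i = heapq.heappop(queue)  # the p-bit whose clock ticks next
        # It reads its neighbors' current values, including any just updated.
        local_input = J * sum(m[j] for j in NEIGHBORS[i])
        m[i] = 1 if math.tanh(BETA * local_input) > rng.uniform(-1.0, 1.0) else -1
        in_ground += energy(m) == ground
        # Schedule this p-bit's next update on its own independent timeline.
        heapq.heappush(queue, (t + rng.expovariate(1.0), i))
    return in_ground / events


if __name__ == "__main__":
    print("fraction of updates in a best solution:", simulate(20_000))
```

No central clock coordinates the p-bits here, yet the ring quickly settles into one of its two best configurations and stays there for most updates, a small-scale analogue of the "self-organizing" behavior the researchers describe.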

So far, the cost of using DACs has prevented p-bits from being mass-produced and deployed in commercial AI hardware, but the researchers believe this discovery could change that. The efficiency gains may also help reduce the substantial environmental impact of modern AI systems.

The team behind the MTJ-based p-bits has not yet published performance comparisons with conventional DAC designs, so their commercial viability remains uncertain at this stage. MTJs also face known challenges, including maintaining thermal stability and reliability while controlling the switching current. Nevertheless, the team is optimistic that their energy-saving breakthrough will make probabilistic computing more accessible across many fields, from solving routing problems in logistics to rapidly searching large sets of possible solutions in scientific research.

Fiona Jackson

Fiona Jackson is a freelance writer and editor who mainly covers science and technology. She has worked as a science news writer at MailOnline and has covered business technology news for TechRepublic, eWEEK and TechHQ.

Fiona began her career writing human-interest stories for international news outlets at the SWNS press agency. She has a Master's degree in chemistry, an NCTJ Diploma and a cocker spaniel named Sully, with whom she lives in Bristol, UK.
